Title

题目

Multidimensional Directionality-Enhanced Segmentation via large visionmodel

多维方向性增强分割通过大规模视觉模型实现

01

文献速递介绍

黄斑疾病影响全球约2亿人,已成为视力损害的主要原因之一。黄斑是视网膜中光感受器密度最高的区域,富含对光敏感的视锥细胞,其主要功能是色觉和高分辨率视觉。这一区域与中心视觉场直接相关,使得黄斑的健康状况对于整体视觉质量至关重要。任何黄斑的病理性改变都会导致视力下降,特别是中央视力的损失,从而显著降低生活质量。黄斑水肿是指黄斑区域视网膜层下的液体积聚,导致视网膜组织肿胀和厚度增加,对视网膜功能和视觉清晰度产生不利影响(Lim等,2012)。常见病因包括年龄相关性黄斑变性(Age-Related Macular Degeneration, AMD)(Manjunath等,2011)、视网膜静脉阻塞(Retinal Vein Occlusion, RVO)以及糖尿病视网膜病变(Diabetic Retinopathy, DR)(Esmaeelpour等,2011;Sim等,2013;Coscas等,2010)。光学相干断层扫描(Optical Coherence Tomography, OCT)通过基于时间差异的反射测量提供了视网膜的分层图像(Huang等,1991;Trichonas和Kaiser,2014),在黄斑的定性和定量分析中起着至关重要的作用(Wolf和Wolf-Schnurrbusch,2010)。然而,由于早期黄斑水肿表现的微小性和多样性,加之定量分析所需的高精度,仅依赖人工诊断面临重大挑战。在此背景下,利用深度学习(LeCun等,2015)和计算机视觉技术(Ma等,2023)对包括脉络膜(Nickla和Wallman,2010)和黄斑水肿(Tranos等,2004)在内的视网膜结构进行高精度分割和定量评估,可以显著提高诊断的准确性和效率。这些技术已在手术应用中得到了整合,可精准定位水肿和病变区域,有助于确定治疗部位并规划手术。然而,在OCT图像中分割视网膜液体仍面临挑战,包括视网膜液体病灶的高度异质性以及OCT成像固有的低对比度和噪声问题。由于医学图像的复杂性,通常需要医学专家进行广泛的手动标注,这一过程耗时且成本高(He等,2022)。因此,传统的监督学习模型常因标注数据集的有限性而受限,这也限制了其在其他任务中的可迁移性(Aljuaid和Anwar,2022)。近年来,基于Transformer架构的大规模视觉模型(Large Vision Model, LVM)在自然和遥感图像处理中展示了显著的通用性(Vaswani等,2017)。这些模型经过数十亿标注数据集的训练,展现出强大的零样本和小样本能力,尤其是在通用图像分割任务中(Kirillov等,2023)。然而,尽管LLMs如SAM和其他基于Transformer的大规模视觉模型在自然图像分割任务中表现卓越,但在CT、MRI和OCT等专用领域的医学图像中识别关键结构和区域时存在困难。这一不足源于训练过程中缺乏足够的医学影像数据(如放射影像)的暴露,突显了医学图像与自然图像成像原理之间的差异(Wu等,2023)。医学图像通常是特定物理信号(如X射线、超声波、MRI)与人体内部结构交互后的可视化结果,其图像密度、强度或颜色的变化反映了内部结构。因此,直接将这些通用模型应用于医学图像分割可能导致识别准确性的大幅下降,难以满足医学诊断对高精度的需求。针对以上挑战,本文提出了一种大规模视觉模型引导的视网膜分割框架(MD-DERFS),该框架由多维特征重编码单元(Multi-Dimensional Feature Re-Encoder Unit, MFU)、跨尺度方向洞察网络(Cross-scale Directional Insight Network, CDIN)以及谐波细节分割平衡损失函数(Harmonic Minutiae Segmentation Equilibrium Loss, HMSE)组成。为了解决大规模视觉模型训练数据集缺乏医学影像数据暴露的问题(Cheng等,2023),MFU利用基于方向一致性映射的方向先验提取机制和水肿纹理映射单元(Edema Texture Mapping Unit),增强模型识别特定纹理、形状和病理特征的能力。MFU还采用迭代注意力特征融合(iAFF),在不显著增加网络层数或参数的情况下关注跨尺度特征,从而提升捕捉医学影像中微小目标和细微病灶的能力。CDIN网络通过整合形态潜在特征放大单元(Morphological Latent Feature Amplification Unit, MLFA)和角向各向异性分解模块(Angular Anisotropic Decomposition Block, AAD),提供从局部到全局的多层次视角,有效弥补大规模视觉模型基于Transformer的编码器在捕获局部特征信息方面的不足(Kirillov等,2023)。此外,HMSE通过结合BCE、Dice和Poly的优势,有效缓解了黄斑水肿中的类别不平衡问题,提高了分割的准确性和鲁棒性。

本文主要贡献如下:

提出了MD-DERFS框架,通过多层次视角和方向先验提取机制,有效增强了编码器捕捉局部细节的能力,克服了大规模视觉模型轻量化解码器的局限性。

引入了MFU网络,通过将高维特征按通道切片划分为不同子集,分别处理后再融合。提出了CDIN,基于像素间的连通性对高维特征中的内容特征和场景特征进行解耦,从而解决因过多特征维度引起的信息冗余和通道干扰问题。

提出了HMSE损失函数,通过结合BCE、Dice和Poly,增强了对数据不平衡的学习能力,提高了OCT黄斑水肿检测的分割精度和鲁棒性。

本文在MacuScan-8K数据集上验证了所提方法的有效性,该数据集包含8000幅标注的SD-OCT图像(Spectralis HRA,德国Heidelberg Engineering公司)。实验表明,MD-DERFS在分割性能上优于现有方法,为医学影像分割任务中的高精度需求提供了新思路。

Aastract

摘要

Optical Coherence Tomography (OCT) facilitates a comprehensive examination of macular edema andassociated lesions. Manual delineation of retinal fluid is labor-intensive and error-prone, necessitating anautomated diagnostic and therapeutic planning mechanism. Conventional supervised learning models arehindered by dataset limitations, while Transformer-based large vision models exhibit challenges in medicalimage segmentation, particularly in detecting small, subtle lesions in OCT images. This paper introduces theMultidimensional Directionality-Enhanced Retinal Fluid Segmentation framework (MD-DERFS), which reducesthe limitations inherent in conventional supervised models by adapting a transformer-based large visionmodel for macular edema segmentation. The proposed MD-DERFS introduces a Multi-Dimensional FeatureRe-Encoder Unit (MFU) to augment the model’s proficiency in recognizing specific textures and pathologicalfeatures through directional prior extraction and an Edema Texture Mapping Unit (ETMU), a Cross-scaleDirectional Insight Network (CDIN) furnishes a holistic perspective spanning local to global details, mitigatingthe large vision model’s deficiencies in capturing localized feature information. Additionally, the framework isaugmented by a Harmonic Minutiae Segmentation Equilibrium loss (HMSE) that can address the challenges ofdata imbalance and annotation scarcity in macular edema datasets. Empirical validation on the MacuScan-8kdataset shows that MD-DERFS surpasses existing segmentation methodologies, demonstrating its efficacy inadapting large vision models for boundary-sensitive medical imaging tasks.

光学相干断层成像(Optical Coherence Tomography, OCT)能够全面检查黄斑水肿及相关病变。然而,视网膜液体的手动勾画过程劳动强度大且易出错,迫切需要一种自动化的诊断和治疗规划机制。传统的监督学习模型受限于数据集规模,而基于Transformer的大规模视觉模型在医学图像分割中也面临挑战,尤其是检测OCT图像中小而微妙的病灶。

本文提出了多维方向性增强视网膜液体分割框架(Multidimensional Directionality-Enhanced Retinal Fluid Segmentation, MD-DERFS),通过改进基于Transformer的大规模视觉模型,用于黄斑水肿分割,以克服传统监督学习模型的局限性。MD-DERFS引入了多维特征重编码单元(Multi-Dimensional Feature Re-Encoder Unit, MFU),通过方向先验提取增强模型对特定纹理和病理特征的识别能力。此外,框架还包括水肿纹理映射单元(Edema Texture Mapping Unit, ETMU)和跨尺度方向洞察网络(Cross-scale Directional Insight Network, CDIN),从局部到全局提供整体视角,弥补大规模视觉模型在捕获局部特征信息方面的不足。

同时,该框架通过谐波细节分割平衡损失函数(Harmonic Minutiae Segmentation Equilibrium Loss, HMSE)解决了黄斑水肿数据集中数据不平衡和标注稀缺的问题。在MacuScan-8k数据集上的实验证明,MD-DERFS优于现有的分割方法,展示了其在适应边界敏感的医学影像任务中应用大规模视觉模型的有效性。

Method

方法

This paper introduces MD-DERFS, a fundus OCT lesion segmentation framework that uses the generalization ability of large visionmodels for multi-dimensional directionality enhancement. We haveretained Segment Anything Model’s encoder in MD-DERFS, while focusing on the subsequent multi-dimensional orientation informationextraction and deep decoding of the encoder, as well as the lossfunction. In this section, we first introduce the overall framework ofMD-DERFS, and then introduce the MFU we propose to improve localfeature extraction. CDIN works with MLFA and AAD to capture globalcontext and complex local details to ensure comprehensive feature extraction. In addition, we introduce HMSE that combines the advantagesof BCE, Dice and the Poly, while addressing the severe categoryimbalance in the retinal edema dataset.

本文提出了MD-DERFS,一种用于眼底OCT病变分割的框架,通过利用大规模视觉模型的泛化能力实现多维方向性增强。我们在MD-DERFS中保留了Segment Anything Model(SAM)的编码器,同时重点关注编码器的多维方向信息提取、深度解码以及损失函数的设计。在本节中,我们首先介绍MD-DERFS的整体框架,然后详细说明我们提出的MFU模块如何改进局部特征的提取。CDIN与MLFA和AAD协同工作,捕获全局上下文和复杂的局部细节,以确保全面的特征提取。此外,我们引入了HMSE损失函数,该函数结合了BCE、Dice和Poly的优点,同时解决了视网膜水肿数据集中严重的类别不平衡问题。

Conclusion

结论

The precise segmentation of OCT images reveals subtle structuralchanges in the retina, instrumental in early detection of eye diseasessuch as macular degeneration, glaucoma, and diabetic retinopathy.Additionally, it plays a crucial role in surgeries, aiding in the exact localization of edematous and lesioned areas, thereby facilitating precisetreatment targeting and surgical planning. This study introduces threemajor enhancements in the task of retinal edema segmentation fromfundus OCT images.We propose MD-DERFS to exploit further the high-level semanticinformation encoded by the large vision model image encoder, forapplication in specialized medical image segmentation domains. Secondly, the MFU was integrated into MD-DERFS, effectively utilizingimage priors and designing an Edema Texture Mapping Unit to better adapt to the unique morphology of retinal edema, enhancing themodel’s ability to capture information about small, localized lesions.Moreover, the CDIN structure was incorporated into MD-DERFS, improving the extraction of directionally relevant information in imagesand providing a multi-level perspective from local to global. Thisallowed the network to simultaneously capture detailed features oflesions and their overall spatial layout, leading to more accurate andreliable segmentation.Furthermore, we introduce HMSE combining BCE, Dice, and Poly,aiming to enhance the model’s learning of edema image information ondatasets with class imbalance, and optimizing the model’s pixel-levelsegmentation accuracy and overall shape recognition in edematousregions.MD-DERFS, by integrating Transformer-based large visual models,overcomes the constraints traditional supervised learning methods facein medical image segmentation tasks due to dataset scale and quality.The innovation of this framework lies in its successful adaptation of thepowerful visual comprehension ability of large models to the uniquedomain of medical imaging. Specifically, its enhanced structure significantly improves the model’s ability to capture key details in fundus OCTimages. The results demonstrate that our method not only transcendsthe limitations of data dependency but also significantly enhancethe recognition accuracy of small, localized lesion areas, providingreliable technical support for the precise diagnosis and treatment ofeye diseases.

OCT图像的精确分割能够揭示视网膜中微妙的结构变化,对于黄斑变性、青光眼和糖尿病视网膜病变等眼部疾病的早期检测至关重要。此外,它在手术中也发挥着关键作用,有助于精确定位水肿和病变区域,从而促进精准的治疗目标定位和手术规划。

本研究在眼底OCT图像的视网膜水肿分割任务中引入了三大改进:提出了MD-DERFS框架 该框架充分利用了大规模视觉模型编码器中高层语义信息,将其应用于医学影像分割的专门领域。通过这一创新,MD-DERFS有效提升了模型对医学图像的适应性和语义理解能力。集成了MFU模块 MFU模块通过设计水肿纹理映射单元(Edema Texture Mapping Unit),充分利用图像的先验信息,更好地适应视网膜水肿的特有形态学特征,增强了模型捕捉小型、局部病灶信息的能力。引入CDIN结构 CDIN结构改善了图像中方向相关信息的提取能力,同时提供从局部到全局的多层次视角,使网络能够同时捕获病灶的细节特征及其整体空间布局,从而实现更精准、更可靠的分割效果。

此外,我们引入了HMSE损失函数,结合了BCE、Dice和Poly的优点,旨在增强模型在类别不平衡数据集上对水肿图像信息的学习能力,并优化模型在水肿区域的像素级分割精度和整体形状识别能力。

MD-DERFS通过整合基于Transformer的大规模视觉模型,克服了传统监督学习方法在医学影像分割任务中因数据规模和质量受限而面临的挑战。该框架的创新在于成功将大模型强大的视觉理解能力适配到医学影像这一独特领域。其增强的结构显著提高了模型在眼底OCT图像中捕捉关键细节的能力。实验结果表明,我们的方法不仅超越了数据依赖的局限性,还显著提升了小型局部病灶区域的识别精度,为眼部疾病的精准诊断与治疗提供了可靠的技术支持。

Results

结果

A dataset named Macular Edema Enhanced Retinal OCT DatasetMacuScan-8k, comprises 8000 annotated B-scan SD-OCT images (Spectralis HRA, Heidelberg Engineering, Germany), obtained from patientsdiagnosed with macular edema at the Zhejiang Provincial People’s Hospital over a five-year period from May 1, 2016, to December 31, 2021.The significant volume and superior annotation quality of MacuScan-8k mark a substantial enhancement over existing publicly availabledatasets in terms of data quantity and collection efforts.The data encompasses retinal OCT scans of 119 patients diagnosedwith macular hole, totaling 126 sequences with each sequence containing 17 to 115 slices. OCT volume scans were centered around themacula, covering an area of 6.0 × 4.5 millimeters (20◦ × 15◦ ), witha resolution of 496 × 512 pixels. The average axial, transverse, andazimuthal pixel spacing were 3.87 μm, 11.50 μm, and 120.96 μm, respectively. All scans originated from the same equipment, and any datawith severe artifacts or significantly reduced signal strength impedingthe recognition of retinal interfaces were excluded.The labeling phase, conducted from June to December 2021, involved five experienced radiologists who manually annotated the retinal, macular edema, and macular hole in each B-scan of the OCTvolumes using segmentation editor software. Following the initial annotation, two senior retinal experts reviewed the results. This reviewprocess included multiple rounds of feedback and revision to ensurethe accuracy of the annotations. In the diagnosis of fundus diseases, IRFrefers to the accumulation of fluid in the retinal layer, which is usuallyassociated with retinopathy such as macular edema. SRF indicates thepresence of fluid under the retinal layer, which is commonly observedin conditions such as choroidal neovascularization and can lead tovisual impairment. The annotation focused on classifying IRF and SRFas one category to enhance segmentation precision in the network(Fig. 9).

一个名为黄斑水肿增强视网膜OCT数据集(Macular Edema Enhanced Retinal OCT Dataset, MacuScan-8k)的数据集包含8000幅标注的B扫描SD-OCT图像(由德国Heidelberg Engineering公司的Spectralis HRA设备采集),这些图像来自浙江省人民医院的黄斑水肿患者,在2016年5月1日至2021年12月31日的五年期间收集。相比现有公开数据集,MacuScan-8k在数据量和采集工作方面实现了显著提升,其数据规模和高质量标注显著增强了数据的实用性。该数据集包含119例黄斑裂孔患者的视网膜OCT扫描,总计126个序列,每个序列包含17到115张切片。OCT体积扫描以黄斑为中心,覆盖6.0 × 4.5毫米的区域(20° × 15°),分辨率为496 × 512像素。平均轴向、横向和方位像素间距分别为3.87 μm、11.50 μm和120.96 μm。所有扫描均来源于相同设备,任何由于严重伪影或信号强度显著降低而影响视网膜界面识别的数据均被剔除。标注阶段于2021年6月至12月进行,由五位经验丰富的放射科医师手动标注OCT体积中每一幅B扫描图像的视网膜、黄斑水肿及黄斑裂孔,使用分割编辑软件完成标注。初始标注后,两位资深视网膜专家对结果进行了审核,审核过程中包括多轮反馈和修订,以确保标注的准确性。

在眼底疾病的诊断中,视网膜内液体(IRF)指的是视网膜层内液体的积聚,通常与黄斑水肿等视网膜病变相关。视网膜下液体(SRF)指的是视网膜层下液体的存在,常见于脉络膜新生血管化等疾病,可能导致视力损害。在标注过程中,将IRF和SRF归为同一类别,以提升网络分割的精度(见图9)。

Figure

图

Fig. 1. From left to right: original OCT images, SAM segmentation outputs, Fine-tunedSAM segmentation outputs on MacuScan-8K, and MD-DERFS segmentation outputs posttraining on MacuScan-8K. The segmentation of the lesion area in the red frame requiresmore fine-grained local feature knowledge. As shown in the figure, the MD-DERFSsignificantly improves the OCT edema segmentation.

图1。 从左到右依次为:原始OCT图像、SAM分割结果、在MacuScan-8K上微调的SAM分割结果,以及在MacuScan-8K上训练后的MD-DERFS分割结果。红色框中的病变区域分割需要更精细的局部特征知识。如图所示,MD-DERFS显著提升了OCT水肿的分割效果。

Fig. 2. The overall framework diagram of MD-DERFS, the fundus OCT image will first be mapped to the feature space by a pre-trained SAM encoder, resulting in five shapeimage embeddings (𝐸1 -𝐸5 ) with size 255 × 64 × 64. 𝐸1 is input into the MFU, where 𝐸1 is sliced along channels to fully exploit the prior knowledge of the OCT image, solvingthe problem that the large SAM model pre-trained lacks specific medical knowledge; 𝐸2 -𝐸5 are input into the CDIN, solving the problem that the visual large model based onTransformer structure is insufficient in extracting local fine features of the image, and the fine features obtained from the two modules are fused by iAFF for attention featurefusion to obtain the segmentation result

图2。 MD-DERFS的整体框架示意图。首先,将眼底OCT图像通过预训练的SAM编码器映射到特征空间,生成五个形状-图像嵌入(𝐸1 -𝐸5),尺寸为255 × 64 × 64。𝐸1被输入到MFU中,在通道上进行切片,以充分利用OCT图像的先验知识,解决大规模SAM模型因缺乏特定医学知识而导致的不足;𝐸2 -𝐸5则输入到CDIN中,解决了基于Transformer结构的大规模视觉模型在提取图像局部精细特征时的不足问题。来自这两个模块的精细特征通过iAFF进行注意力特征融合,最终生成分割结果。

Fig. 3. Diagram structure of the MFU. The MFU employs feature slicing to segmentthe input into distinct groups. Each group is processed by the Edema Texture MappingUnit to extract directional prior features. Subsequent fusion via the iAFF optimizessegmentation accuracy while maintaining the framework’s complexity at a manageablelevel

图3。 MFU(多维特征重编码单元)的结构示意图。MFU采用特征切片,将输入划分为不同的组。每个组通过水肿纹理映射单元(Edema Texture Mapping Unit)处理,以提取方向性先验特征。随后,通过iAFF(迭代注意力特征融合)进行特征融合,在优化分割精度的同时保持框架复杂性在可控范围内。

Fig. 4. The Edema Texture Mapping Unit mentioned in the overview, where LayerNormalization means Layer Normalization, the MFU’s sliced features are fed into theEdema Texture Mapping Unit to achieve a comprehensive multi-angle analysis of theimage in terms of spatial and textural nuances.

图4。 概述中提到的水肿纹理映射单元(Edema Texture Mapping Unit)结构示意图,其中LayerNormalization表示层归一化。MFU切片后的特征被输入到水肿纹理映射单元中,以实现对图像在空间和纹理细微差异方面的全面多角度分析。

Fig. 5. The network structure diagram of CDIN, where image embeddings 𝐸i obtainedfrom the SAM encoder. The module features multiple stages of deep feature extractionusing AAD and MLFA modules.

图5。 CDIN(跨尺度方向洞察网络)的网络结构示意图,其中图像嵌入 𝐸i 由SAM编码器生成。该模块通过AAD(角向各向异性分解模块)和MLFA(形态潜在特征放大单元)实现多阶段深度特征提取。

Fig. 6. The structure diagram of MLFA. The module consists of two stages, each incorporating a dilated convolution layer followed by a Batch Normalization and a ReLU activationfunction. Between the two stages, an upsampling layer is employed to increase the spatial resolution of the feature maps. This module aims to capture multi-scale features effectivelyby leveraging dilated convolutions and subsequent upsampling

图6。 MLFA(形态潜在特征放大单元)的结构示意图。该模块包含两个阶段,每个阶段均由一个膨胀卷积层(dilated convolution layer)、一个批量归一化层(Batch Normalization)以及一个ReLU激活函数组成。在两个阶段之间,使用一个上采样层(upsampling layer)来提高特征图的空间分辨率。该模块旨在通过利用膨胀卷积和后续的上采样,有效捕获多尺度特征。

Fig. 7. The structure diagram of AAD. The module consists of two main components:the Scene Encoder and the Content Encoder. The Scene Encoder primarily includestwo dilated convolutions and an SPP module. The Content Encoder comprises dilatedconvolutions, Batch Normalization, and ReLU activation functions. The outputs fromthese encoders are combined and processed through a sigmoid function to formrelational features 𝐸𝑠𝑐 , which are then integrated to produce the final 𝐸𝐴𝐴𝐷.

图7。 AAD(角向各向异性分解模块)的结构示意图。该模块由两个主要部分组成:场景编码器(Scene Encoder)和内容编码器(Content Encoder)。场景编码器主要包括两个膨胀卷积层(dilated convolutions)和一个SPP模块(空间金字塔池化模块)。内容编码器由膨胀卷积层、批量归一化层(Batch Normalization)和ReLU激活函数组成。这两个编码器的输出经过sigmoid函数处理生成关系特征 𝐸𝑠𝑐,随后进行整合以生成最终的 𝐸𝐴𝐴𝐷。

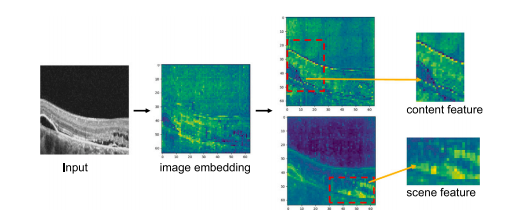

Fig. 8. Depth space feature visualization. After being encoded by SAM’s encoder, theimage embedding contains a lot of noise information. By separating the content featuresand scene features in the depth space using CDIN, further depth feature extraction isperformed using AAD, resulting in content features that include clear boundaries, textures, etc., and scene features that include edema volume and background information,which are very helpful for edema segmentation.

图8。 深度空间特征可视化。通过SAM编码器编码后,图像嵌入中包含大量噪声信息。通过CDIN在深度空间中分离内容特征和场景特征,进一步利用AAD进行深度特征提取,从而生成包含清晰边界、纹理等的内容特征,以及包含水肿体积和背景信息的场景特征。这些特征对水肿分割非常有帮助。

Fig. 9. The figure shows part of the dataset image, where the region selected in the blue box is IRF and the region selected in the red box is SRF. The labels F1 through F6 areused for reference and identification of each frame.

图9。 图中展示了数据集的一部分图像,其中蓝色框选区域为视网膜内液体(IRF),红色框选区域为视网膜下液体(SRF)。标签F1至F6用于参考和标识每一帧图像。

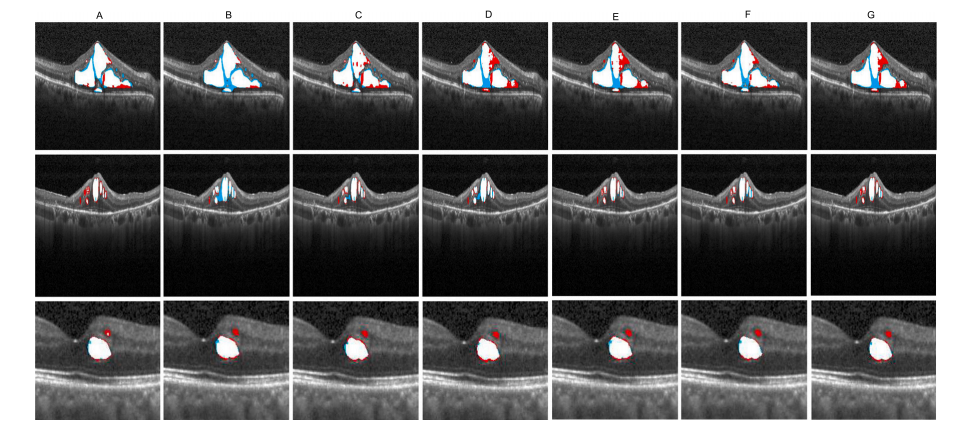

Fig. 10. Comparison of segmentation effects. The original OCT image is shown in thefigure, the manually annotated GroundTruth is represented by a red mask, and theresult of automatic segmentation using the model is represented by a blue mask, asshown in the figure is the MD-DERFS segmentation result, the resulting mask of twodifferent colors is merged into one image, and the overlapping part is represented bywhite.

图10。 分割效果对比。图中显示了原始OCT图像,手动标注的真值(GroundTruth)用红色掩膜表示,模型自动分割结果用蓝色掩膜表示。图中展示的是MD-DERFS的分割结果,两种不同颜色的掩膜合并为一幅图像,重叠部分用白色表示。

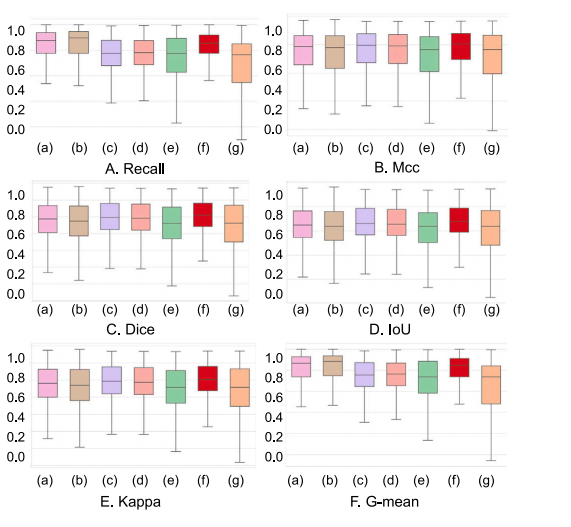

Fig. 11. Box plots comparing six indicators with the baseline: Recall, Mcc, Dice, IoU, Kappa, and G-mean. On the 𝑥-axis, the model is labeled as: (a) U-Net; (b) AttUnet; ©SegNet; (d) FCN-8s; (e) Ene

图11。 箱线图比较六个指标与基线的表现:召回率(Recall)、马修斯相关系数(Mcc)、Dice系数(Dice)、交并比(IoU)、Cohen’s Kappa系数(Kappa)和几何均值(G-mean)。在𝑥轴上,模型的标记为:(a)U-Net;(b)AttUnet;(c)SegNet;(d)FCN-8s;(e)Ene。

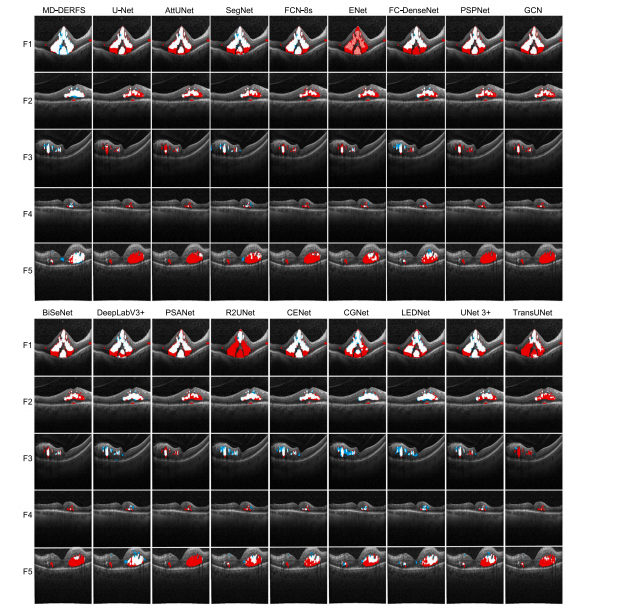

Fig. 12. Visual comparison of the evaluation results of MD-DERFS and 17 other segmentation methods on the MacuScan-8k dataset. We selected 6 representative images fordisplay. In these images, GroundTruth is shown in red, the mask of the model segmentation is shown in blue, and the coincident parts are white to indicate that the segmentationis correct. In instances F1 and F5, the edema regions are indistinguishably segmented from the adjacent retinal tissue, highlighting MD-DERFS’s exceptional inferential strength forprecise segmentation. Additionally, MD-DERFS consistently achieves accurate segmentation of the edema areas and their peripheries in instances F2, F3, F4, and F6.

图12。 MD-DERFS与其他17种分割方法在MacuScan-8k数据集上的评价结果可视化对比。我们选择了6幅具有代表性的图像进行展示。图中,手动标注的真值(GroundTruth)以红色显示,模型分割的掩膜以蓝色显示,重叠部分以白色表示,表明分割正确。在实例F1和F5中,水肿区域与相邻视网膜组织之间的边界被精确区分,突显了MD-DERFS在精准分割上的卓越推理能力。此外,在实例F2、F3、F4和F6中,MD-DERFS始终能够准确分割水肿区域及其周边区域。

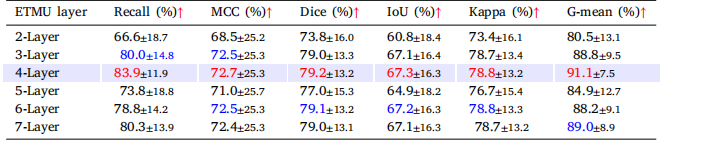

Fig. 13. Box plots showing six indicators from the ablation study on Edema TextureMapping Units. On the 𝑥-axis, the model is labeled as: (a) MFU 2-Layer; (b) MFU3-Layer; © MFU 4-Layer; (d) MFU 5-Layer; (e) MFU 6-Layer; (f) MFU 7-Layer.

图13。 箱线图展示了关于水肿纹理映射单元(Edema Texture Mapping Units)的消融研究中的六个指标。在𝑥轴上,模型标签分别为:(a)MFU 2层;(b)MFU 3层;(c)MFU 4层;(d)MFU 5层;(e)MFU 6层;(f)MFU 7层。

Fig. 14. Box plots showing six indicators from the ablation study on the key frameworkmodules. On the 𝑥-axis, the model is labeled as: (a) SAM; (b) SAM+CDIN+iAFF; ©SAM+CDIN+MFU-6L; (d) SAM+CDIN+MFU-4L; (e) CDIN+MFU; (f) SAM+CDIN+MFU-4L+iAFF; (g) SAM+ CDIN+MFU-6L+iAFF.

图14。 箱线图展示了关于关键框架模块的消融研究中的六个指标。在𝑥轴上,模型标签分别为: (a)SAM; (b)SAM+CDIN+iAFF; (c)SAM+CDIN+MFU-6L; (d)SAM+CDIN+MFU-4L; (e)CDIN+MFU; (f)SAM+CDIN+MFU-4L+iAFF; (g)SAM+CDIN+MFU-6L+iAFF。

Fig. 15. Box plots showing six indicators from the ablation study on loss. On the𝑥-axis, the model is labeled as: (a) 𝛾𝛽-Focus; (b) 𝛾𝛼-Focus; © 𝛼𝛽-Focus; (d) 𝛽𝛼-Focus; (e)𝛽𝛾-Focus; (f) Uniform; (g) 𝛼-Focus

图15。 箱线图展示了关于损失函数的消融研究中的六个指标。在𝑥轴上,模型标签分别为: (a)𝛾𝛽-Focus; (b)𝛾𝛼-Focus; (c)𝛼𝛽-Focus; (d)𝛽𝛼-Focus; (e)𝛽𝛾-Focus; (f)Uniform; (g)𝛼-Focus。

Fig. 16. Comparison of the structure of the ablation experiment on the model structure of MD-DERFS. A-E represent MD-DERFS, SAM+CDIN+iAFF, SAM+CDIN+MFU-6L,SAM+CDIN+MFU-4L, CDIN+MFU, SAM+CDIN+MFU-4L+iAFF, SAM+CDIN+MFU-6L+iAFF, red indecate GroundTurth, blue denote the segmentation result of each model, andthe overlapping is represented by white.

图16。 关于MD-DERFS模型结构的消融实验对比示意图。A-E分别表示以下模型:MD-DERFS、SAM+CDIN+iAFF、SAM+CDIN+MFU-6L、SAM+CDIN+MFU-4L、CDIN+MFU、SAM+CDIN+MFU-4L+iAFF,以及SAM+CDIN+MFU-6L+iAFF。图中红色表示真值(GroundTruth),蓝色表示各模型的分割结果,重叠部分以白色表示。

Table

表

Table 1Hyperparameter configurations utilized throughout the experimental protocol

表1 实验过程中使用的超参数配置

Table 2Experimental results of the proposed method and 17 previous segmentation methods on the MacuScan-8k dataset. The best value of the experimental results is highlighted in red,and the second best value is highlighted in blue.

表2本文提出的方法与17种现有分割方法在MacuScan-8k数据集上的实验结果。实验结果中,最佳值以红色标出,次优值以蓝色标出。

Table 3The table shows the results of the ablation experiments that were gradually added to our innovative network on the basis of large vision model. The red data is the best resultof the corresponding index, whereas the blue data is the secend.

表3本表展示了基于大规模视觉模型逐步添加到我们创新网络中的消融实验结果。红色数据表示相应指标的最佳结果,蓝色数据表示次优结果。

Table 4The table aims to explore the impact of various hyperparameter configurations on our loss function on model performance.Red data in the table denotes the optimal results for the respective metrics, whereas blue signifies the second-best outcomes.

表4本表旨在探讨不同超参数配置对损失函数和模型性能的影响。表中红色数据表示相应指标的最佳结果,蓝色数据表示次优结果。

Table 5The main segmentation metrics of the model under five different numbers of Edema Texture Mapping Unitlayers, namely 2-Layer, 3-Layer, 4-Layer, 5-Layer, 6-Layer, 7-Layer, are presented. The data in red is thebest result of the corresponding index, and the blue data is the second best result.

表5本表展示了模型在不同数量的水肿纹理映射单元层(Edema Texture Mapping Unit Layers)下的主要分割指标,包括2层、3层、4层、5层、6层和7层的配置。表中红色数据表示相应指标的最佳结果,蓝色数据表示次优结果。