Title

题目

Improving cross-domain generalizability of medical image segmentation using uncertainty and shape-aware continual test-time domain adaptation

提高医学图像分割跨领域泛化能力:基于不确定性和形状感知的持续测试时域适应

01

文献速递介绍

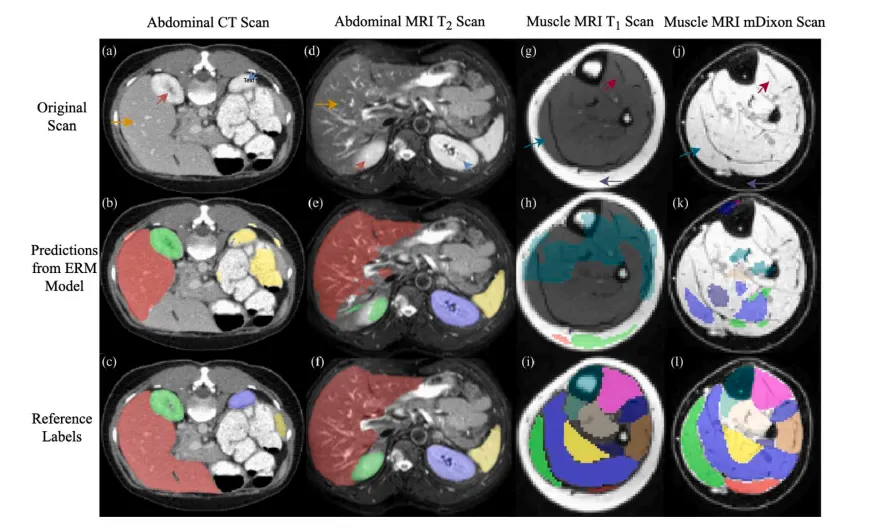

近年来,深度神经网络在医学图像分析等多个图像处理与计算机视觉领域取得了前所未有的进展(Litjens等,2017)。特别是,卷积神经网络(CNN)等模型在表征学习能力方面表现出色,达到甚至超越了人类专家在多种视觉任务中的表现,前提是以监督方式进行训练(Yann等,2015)。然而,监督模型需要大量标注数据进行训练,这限制了其在医学图像任务中的应用,因为生成像素级标注的成本非常高。此外,监督模型在实际部署时,通常会面临来自不同于训练数据分布的目标域的新测试样本,从而导致推理性能下降。在此,“域”指的是由特定数据收集协议和背景定义的数据分布。在医学成像中,不同的域可能包含不同模态的数据(图1(a,d)),或者是相同模态但由不同站点或不同采集参数获取的数据。不同域数据之间常存在显著的分布差异和图像外观差异。例如,T1加权MRI和mDixon MRI扫描对于相同的解剖结构呈现出不同的对比度(图1(g,j))。这种训练域与测试域之间的偏差,被称为域漂移,在医学图像任务中很常见(Ben-David等,2010),这不仅妨碍了训练模型的共享,还需要跨数据集重新训练,限制了深度神经网络在预算和时间受限场景下的使用。

域适应(Domain Adaptation,DA) 是解决深度模型跨域泛化能力不足的一个极具潜力的方法。DA旨在将一个在标注的源域上训练的模型适配到未见的目标域,同时尽可能减少性能损失(Bateson等,2022a)。DA方法包括域对抗训练(Zeng等,2021)、域翻译(Yang等,2019)以及自训练技术(Zou等,2018)。这些方法需要在适配阶段访问源域数据,而源域训练数据由于隐私问题和计算限制,通常不可用。为此,提出了无需访问源数据的算法,这些算法利用先验信息或解剖学信息(Bateson等,2019,2021)。然而,这些方法在解剖学信息建模的灵活性方面存在局限性。因此,最近发展了无源域适应(SFDA) 方法以避免这些限制(Kundu等,2020;Liu等,2021)。然而,SFDA方法需要目标域的训练集和测试集,而这些数据集并不总是可以获得(Bateson等,2022b)。近期,一些新的方法被提出,如测试时域适应(TTA)(Wang等,2021a)、持续测试时域适应(CTTA)(Liang等,2023)和域泛化(DG)(Zhou等,2023)。TTA方法通过直接将预训练模型适配到目标测试集,提高了其实用性,无需目标域的训练集。CTTA方法与TTA类似,但更加关注长期持续适应过程中的鲁棒性。DG方法的运行方式则有所不同,其目标是在推理阶段不更新参数的情况下,从一个或多个源域生成一个更具泛化性的模型。

CTTA可用于对纵向研究中在不同时间点采集的医学图像进行分割,但适配的前提是源模型(通常通过经验风险最小化(ERM) 训练)在目标域上已经具有一定的性能(图1(a-f))。然而,ERM模型在严重的域漂移情况下往往难以提供足够的适应性能(图1(g-l))。可以利用领域知识设计预处理过程,以减少域间差距,使ERM模型在目标域上表现良好(Kim和Chai,2021)。然而,当一个预训练模型需要共享给多个终端用户以适配不同测试时数据分布时,设计预处理过程的工作量会显著增加。

为了解决这些问题,我们提出了一种通用CTTA框架,用于跨域医学图像分割。我们首先将形状感知特征学习引入现有模型,并使用DG技术在源域上训练它们。这消除了对目标域数据进行精细预处理的需求,并使源模型在大多数目标域上具有一定的性能,无论域漂移的严重程度如何。然后,我们使用不确定性加权多任务平均教师网络进行适应,生成更高精度和更精细轮廓的结果。此外,我们提出了一种新颖的多级跨任务正则化方案,通过在局部和全局尺度上增强由学生模型生成的形状表示和对应分割图之间的一致性,利用几何约束来改善性能。为实现持续适应性,在每一步适配中,将模型的一小部分权重随机重置为使用DG技术训练的初始状态和域不变特征状态。这一过程使模型在适配到新测试样本时,能够退回到合理的初始性能,从而避免过拟合并提高整体泛化能力。我们证明,该框架适用于ERM和DG训练的源模型,并在以下方面表现出色:(1)在五个不同的多站点或多模态数据集上执行具有挑战性的跨域分割任务时,优于多个最新的先进方法;(2)在各种场景下,比同类方法更适合CTTA。本文基于我们之前的工作,该工作已在2023年国际医学图像计算与计算机辅助干预会议(MICCAI)中早期接受(Zhu等,2023)。在本次研究中,我们从以下几个方面改进了会议论文:(1)我们的方法经过优化,结合了不确定性排序的多级跨任务一致性,进一步提升了性能;(2)我们在两个新的跨域分割任务上进行了实验,并包含了更深入的实验分析,以展示所提出框架的广泛适用性。

Aastract

摘要

Continual test-time adaptation (CTTA) aims to continuously adapt a source-trained model to a target domainwith minimal performance loss while assuming no access to the source data. Typically, source models aretrained with empirical risk minimization (ERM) and assumed to perform reasonably on the target domain toallow for further adaptation. However, ERM-trained models often fail to perform adequately on a severelydrifted target domain, resulting in unsatisfactory adaptation results. To tackle this issue, we propose ageneralizable CTTA framework. First, we incorporate domain-invariant shape modeling into the model andtrain it using domain-generalization (DG) techniques, promoting target-domain adaptability regardless of theseverity of the domain shift. Then, an uncertainty and shape-aware mean teacher network performs adaptationwith uncertainty-weighted pseudo-labels and shape information. As part of this process, a novel uncertaintyranked cross-task regularization scheme is proposed to impose consistency between segmentation maps andtheir corresponding shape representations, both produced by the student model, at the patch and global levelsto enhance performance further. Lastly, small portions of the model’s weights are stochastically reset to theinitial domain-generalized state at each adaptation step, preventing the model from ‘diving too deep’ into anyspecific test samples. The proposed method demonstrates strong continual adaptability and outp

持续测试时适应(Continual Test-Time Adaptation, CTTA)旨在在不访问源数据的情况下,持续将源域训练的模型适配到目标域,同时将性能损失降至最低。通常,源模型通过经验风险最小化(ERM)进行训练,并假设其在目标域上具有合理的性能以支持进一步的适应。然而,ERM训练的模型在目标域发生严重漂移时往往无法表现良好,导致适应结果不理想。

为了解决这一问题,我们提出了一种通用的CTTA框架。首先,我们将域不变的形状建模引入模型,并使用域泛化(Domain-Generalization, DG)技术进行训练,无论域漂移的严重程度如何,都能提升目标域的适应性。然后,使用一个基于不确定性和形状感知的平均教师网络,通过不确定性加权的伪标签和形状信息来进行适应。在此过程中,我们提出了一种新颖的不确定性排序跨任务正则化方案,该方案在局部(patch)和全局(global)水平上,通过对由学生模型生成的分割图和对应的形状表示施加一致性约束,进一步增强模型性能。

最后,在每一步适应中,模型的小部分权重会以随机方式重置到初始域泛化状态,防止模型过于“深陷”于任何特定测试样本中。这种方法能够避免模型过度拟合目标域中的某些特定数据。

所提出的方法展现出了强大的持续适应能力和跨域推广性。

Method

方法

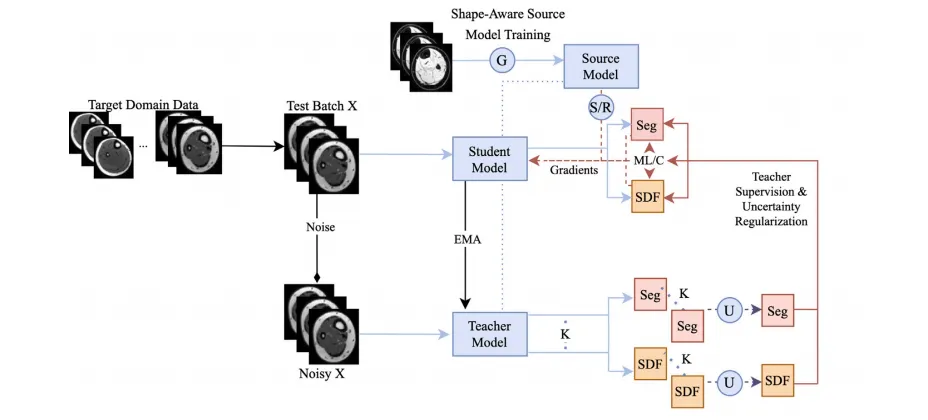

The proposed framework is a synergy of four components (Fig. 2)🙁1) shape-aware model training, (2) shape and uncertainty-aware meanteacher network, (3) multi-level cross-task consistency regularizationwith uncertainty ranking, and (4) domain-generalized stochastic weightrestoration for continual adaptation. Component (1) is used for modeltraining in the source domain, while (2) - (4) are used simultaneouslyfor CTTA. We describe each component in detail below.

提出的框架由四个组成部分协同构成(图2): (1) 形状感知模型训练; (2) 形状与不确定性感知的平均教师网络; (3) 带有不确定性排序的多级跨任务一致性正则化; (4) 面向持续适应的域泛化随机权重恢复。

组件(1)用于在源域中进行模型训练,而组件(2)-(4)则同时用于CTTA(持续测试时适应)。以下将详细描述每个组件的功能与设计。

Conclusion

结论

In this study, we presented a generalizable continual test-time adaptation framework for cross-domain segmentation of medical images.Our framework first trains a model on the source domain with domaininvariant shape features before adapting it to the target domain withuncertainty-weighted pseudo-labels and SDF maps. In addition, a novelmulti-level uncertainty-ranked cross-task consistency loss was proposedto further improve the performance of the student model. Our methodcan work with ERM or DG-trained source models and outperformedits peers on five cross-site/cross-domain segmentation tasks withoutshowing performance degradation as the adaptation progressed. Ourframework can continuously adapt the source model to unknown testdata online for the segmentation task, significantly reducing the costand bias associated with manual labeling.

在本研究中,我们提出了一种通用的持续测试时适应框架,用于医学图像的跨域分割。我们的框架首先在源域上训练模型,提取域不变的形状特征,然后利用基于不确定性加权的伪标签和符号距离场(SDF)映射将其适应到目标域。此外,我们还提出了一种新颖的多层次不确定性排序跨任务一致性损失,以进一步提升学生模型的性能。我们的方法可以与基于经验风险最小化(ERM)或领域泛化(DG)训练的源模型配合使用,并在五个跨站点/跨域分割任务中表现优于同类方法,同时在适应过程中没有表现出性能下降。我们的框架能够在线持续将源模型适应到未知的测试数据分割任务,大大降低了与手动标注相关的成本和偏差。

Results

结果

We evaluated our method and other benchmarking methods onfive datasets of different types of medical images. Each dataset has aunique distribution due to images collected from multiple sites and/ormodalities. We describe each dataset in paragraphs below.The first dataset is a cross-site binary prostate segmentation datasetfrom T2 -weighted MRI scans collected from six different sites where12–30 scans were available for each site (Bloch et al., 2015; Lemaîtreet al., 2015; Litjens et al., 2014).The second dataset is a cross-site and cross-modality multi-class(liver, left and right kidneys, and spleen) abdominal segmentationdataset between 30 CT and 20 MRI T2 -SPIR scans (Landman et al.,2015; Kavur et al., 2021).The third dataset is a same-site cross-modality muscle segmentationdataset with 13 lower-leg muscles and bones between 30 MRI T1 andmDixon scans (Zhu et al., 2021).The fourth dataset is a cross-site and cross-modality whole braintumor segmentation dataset (Menze et al., 2015) from over 100 MRI T2FLAIR and T2 -weighted scans. The dataset was collected by two centers.The last dataset is a cross-site and cross-modality heart segmentation dataset of four substructures (left ventricle, myocardium, leftatrium, and ascending aorta) from 20 MRI balanced steady-state freeprecession (b-SSFP) and CT scans (Wu and Zhuang, 2020).All scans were normalized to zero mean and unit variance beforebeing reformatted to 2D. Following other studies (Ouyang et al., 2023;Zhu et al., 2022; Wu and Zhuang, 2020), the prostate, brain tumor,and abdominal scans were resized to 192 × 192 pixels while the heartsubstructure and the muscle scans were center-cropped to 256 × 256and resized to 128 × 128 pixels, respectively. Lastly, a window of[−275, 125] in Houndsfield units was applied to CT scans and the top0.5% of the histogram of MRI scans was clipped.For the first dataset, we treated each site as the source domainand adapted to all other sites. The adaptation was performed in bothalphabetic and randomized orders. For example, the source model wasfirst trained on site A, then adapted to sites B, C, D, E, and F (alphabetic)and also adapted to sites F, E, D, B, and C (randomized). For otherdatasets, we first performed adaptation from modality A to B, then fromB to A. All experiments were performed in an online and continuoumanner: each test scan arrived randomly and was broken down intomultiple batches if needed. The model adapted itself to each batchbefore making a prediction. U-Net with an EfficientNet-b2 backbonewas used as the source model for all our experiments. We trained thesource model with ERM to provide a baseline model susceptible todomain shifts, and also with CiDG to produce another baseline thatis domain-generalized (i.e., resilient to domain shifts). Both baselinemodels were used by all the benchmarked adaptation methods toevaluate their efficacy in improving a baseline model’s target-domainperformance in various conditions. The Adam optimizer (Kingma andBa, 2015) was used with a learning rate of 0.001 and a batch sizeof 32. 𝛼 was set to 0.5 to achieve a balance between the local andglobal cross-task consistency terms, and 𝛽 was set to 1 to fully utilizethe self-regularization of the student model. 𝜅 was set to −1500 toapproximate the inverse transformation from the segmentation labelsto SDF maps, and 𝑝 to 0.01 to restore roughly 1% of model parametersback to their initial state with each gradient update. The model wasupdated for two steps (i.e., two gradient updates per test batch) forthe muscle, heart substructure, and brain tumor segmentation, fivesteps for the prostate segmentation, and ten steps for the abdominalsegmentation. The number of patches 𝑀 is determined by the slidingwindow size and its stride, which were empirically set to 48 and 32,respectively. 𝑁 was set to 12of 𝑀 to select the top 50% of patches withthe highest uncertainty scores to enforce effective local cross-task regularization while maintaining a reasonable computational efficiency.All hyperparameters except for the number of gradient update stepsare shared across all datasets. Our methods were implemented usingPyTorch 1.10.0 and trained on one Nvidia Tesla V100 GPU.2In addition, we calculated the final performance of each modelby using each model to re-predict the segmentation labels of all testsamples after the adaptation was completed. We then compared thefinal performance of each model against their running performanceto evaluate their ability for continual adaptation. Here, the term ‘running performance’ represents the cumulative evaluation metric scorescalculated from results produced by the model after each test batchwhile the adaptation was ongoing. On the other hand, the ‘final performance’ refers to the scores calculated from the results produced by the‘adapted’ model in one go after the adaptation had been completed. Theperformance was quantitatively evaluated by their volume-wise DiceSimilarity Coefficient (Dice, in %, the higher the better) and AverageSymmetric Surface Distance (ASSD, in mm, the lower the better).The statistical significance between results was determined with theWilcoxon signed-rank test at the pixel level. The significance thresholdwas set to 0.05.Lastly, we performed the adaptation at both the domain level(i.e., continuous adaptation) and single-image level to highlight thevalue of continuous adaptation. When performing the adaptation at thesingle-image level, the model was adapted to each test batch beforere-initializing its weights for the next test batch, whereas the model’sweights were continuously updated throughout the adaptation processfor the domain-level adaptation.

我们在五个不同类型的医学图像数据集上对提出的方法及基准方法进行了评估。每个数据集因图像来自多个站点和/或模态而具有独特的分布。以下是对每个数据集的描述:第一个数据集:这是一个跨站点的二分类前列腺分割数据集,来自六个不同站点的T2加权MRI扫描,每个站点提供了12-30个扫描样本(Bloch等,2015;Lemaître等,2015;Litjens等,2014)。

第二个数据集:这是一个跨站点和跨模态的多类别腹部分割数据集,包含肝脏、左右肾脏和脾脏的分割,数据来源包括30个CT扫描和20个MRI T2-SPIR扫描(Landman等,2015;Kavur等,2021)。

第三个数据集:这是一个同站点的跨模态肌肉分割数据集,包含小腿的13块肌肉和骨骼,数据来源为30个MRI T1和mDixon扫描(Zhu等,2021)。

第四个数据集:这是一个跨站点和跨模态的脑肿瘤分割数据集,包含超过100个MRI T2-FLAIR和T2加权扫描样本,数据由两个中心收集(Menze等,2015)。

第五个数据集:这是一个跨站点和跨模态的心脏分割数据集,包含四个子结构(左心室、心肌、左心房和升主动脉),数据来源于20个MRI平衡稳态自由进动(b-SSFP)和CT扫描(Wu和Zhuang,2020)。

数据预处理 所有扫描样本在转换为2D之前均进行标准化(零均值,单位方差)。前列腺、脑肿瘤和腹部扫描样本调整为192×192像素。心脏子结构扫描样本中心裁剪为256×256像素;肌肉扫描样本调整为128×128像素。对于CT扫描,应用Houndsfield单位范围[−275, 125]的窗口;MRI扫描的直方图最高0.5%部分被裁剪。实验设计对于前列腺数据集,将每个站点作为源域并适配到其他所有站点,适配以字母顺序(如A→B、C、D、E、F)和随机顺序(如A→F、E、D、B、C)进行。对于其他数据集,从模态A适配到模态B,然后从模态B适配到模态A。所有实验以在线和持续方式进行:每次测试扫描样本随机到达,如有需要将其分批,模型在每个批次预测前对其进行适配。模型与训练源模型:U-Net,骨干网络为EfficientNet-b2。源模型训练:通过ERM训练提供对域漂移敏感的基线模型,同时通过CiDG训练提供对域漂移弹性的泛化基线模型。优化器:Adam(Kingma和Ba,2015),学习率为0.001,批量大小为32。超参数α 设置为0.5,平衡局部和全局跨任务一致性项。β 设置为1,充分利用学生模型的自正则化能力。κ 设置为−1500,近似从分割标签到SDF(Signed Distance Function)映射的逆变换。

p 设置为0.01,以在每次梯度更新中随机恢复约1%的模型参数。每个测试批次的适配步数如下:肌肉、心脏子结构和脑肿瘤分割:2步前列腺分割:5步腹部分割:10步滑动窗口的大小和步幅分别设置为48和32,用于确定补丁数量M,并设置N为M的一半(选择不确定性分数最高的前50%补丁)。

实现与硬件 所有方法使用PyTorch 1.10.0实现,并在一块Nvidia Tesla V100 GPU上训练。性能评估 我们在适配完成后使用“适配后”模型对所有测试样本重新预测分割标签,并计算最终性能。运行性能:模型在适配过程中每次测试批次后产生的累计评估指标得分。最终性能:适配完成后一次性预测的结果评估得分。评估指标包括Dice相似系数(越高越好)和平均对称表面距离ASSD(越低越好)。统计显著性通过Wilcoxon符号秩检验在像素级确定,显著性阈值为0.05。适配模式 域级适配:模型权重在整个适配过程中不断更新。单图像级适配:模型对每个测试批次适配后重新初始化权重,再适配下一个批次。两种模式用于验证持续适应的价值。

Figure

图

Fig. 1. Demonstrations of different severities of domain shifts. Example 1: panels (a) and (d) are CT and MRI T2 scans containing the same abdominal structures, © and (f) aretheir segmentation ground truth labels, and (b) and (e) are cross-domain predictions for (a) and (d) by an ERM model trained on (d) and (a), respectively. Example 2: panels (g)and (j) are MRI T1 and mDixon scans containing the same musculoskeletal structures, (i) and (l) are their segmentation ground truth labels, and (h) and (k) are labels predictedfor (g) and (j) by an ERM model trained on (j) and (g), respectively. Arrows of the same color indicate the same anatomical structures across different domains.

图1. 不同域漂移严重程度的示例。 示例1:面板(a)和(d)分别为包含相同腹部结构的CT和MRI T2扫描图像,©和(f)是它们的分割真实标签,(b)和(e)是ERM模型分别在(d)和(a)上训练后对(a)和(d)的跨域预测结果。 示例2:面板(g)和(j)分别为包含相同骨骼肌结构的MRI T1和mDixon扫描图像,(i)和(l)是它们的分割真实标签,(h)和(k)是ERM模型分别在(j)和(g)上训练后对(g)和(j)的预测结果。 相同颜色的箭头指示在不同域中相同的解剖结构。

Fig. 2. Schematic of the proposed CTTA framework. The model is first trained on the source domain with shape-aware DG techniques for generalizable and adaptable baseline performance. Then, a multi-task uncertainty-weighted mean teacher network adapts the student model to an unseen and unlabeled target domain via uncertainty-weighted pseudopredictions produced by the teacher model. Meanwhile, the student model is regularized via an uncertainty-ranked multi-level loss to ensure the cross-task consistency betweenits SDF and segmentation predictions at various scales. Small portions of the model are also reset to their initial shape-aware state at each step to counter catastrophic forgettingand improve the robustness of continual adapt ation.

图2. 提议的CTTA框架示意图。模型首先通过形状感知的域泛化(DG)技术在源域上进行训练,以获得可泛化且适应性强的基线性能。随后,一个多任务不确定性加权的平均教师网络通过教师模型生成的不确定性加权伪预测,将学生模型适配到未见且无标注的目标域。同时,通过不确定性排序的多级损失对学生模型进行正则化,以确保其签名距离场(SDF)和分割预测在不同尺度上的跨任务一致性。在每一步适应中,模型的一小部分权重被重置为初始的形状感知状态,以抵消灾难性遗忘并提高持续适应的鲁棒性。

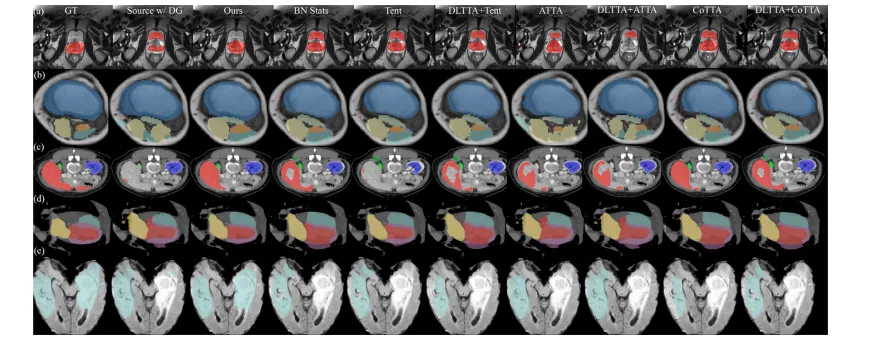

Fig. 3. Qualitative evaluation of benchmarked methods on the task of (a) cross-site MRI T2 prostate segmentation, (b) MRI mDixon → T1 muscle segmentation, © MRI T2 → CTabdominal segmentation, (d) MRI b-SSFP → CT heart substructure segmentation, and (e) MRI T2 → FLAIR brain tumor segmentation. Best viewed when zoomed in

图3. 基准方法在以下任务中的定性评估: (a) 跨站点MRI T2前列腺分割, (b) MRI mDixon → T1肌肉分割, © MRI T2 → CT腹部分割, (d) MRI b-SSFP → CT心脏子结构分割, (e) MRI T2 → FLAIR脑肿瘤分割。

Table

表

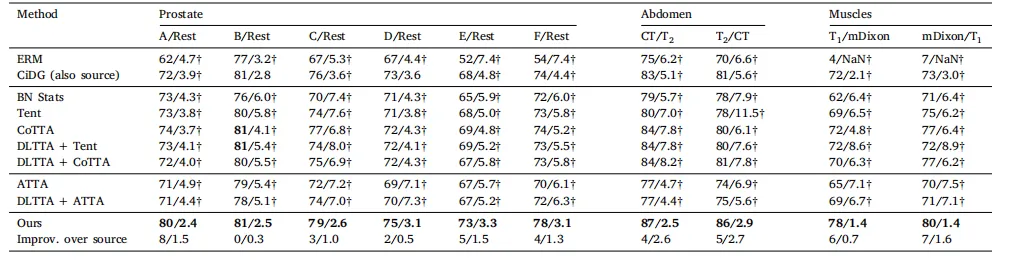

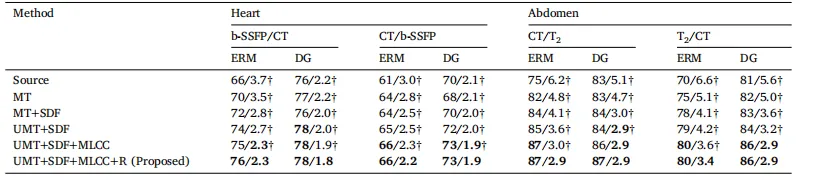

Table 1 Comparison on four datasets. denotes the results obtained by reproduced based on the publicly codes. The best and second-best results are marked in bold and underlined, respectively

表 1 四个数据集上的比较。表示基于公开代码复现所得的结果。最佳结果和次优结果分别用加粗和下划线标注。

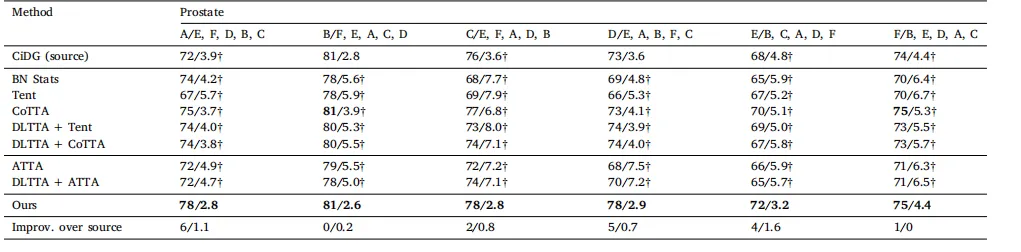

Table 2Quantitative evaluation of all methods with CiDG-trained source model on the cross-site prostate task. The adaptation was performed with randomized orders (e.g., A/E, F, D, B, Cmeans the source model was trained on site A and adapted to target domains in order of E, F, D, B, and C, and so on). The same source model (CiDG) was used by all adaptationmethods. Results are shown as Dice (%)/ASSD (mm). The second row shows source/target domains. Source, general, and medical methods are placed into their respective groups.† denotes statistical significance between the Dice/ASSD score of a method and that of our method (𝑝 < 0.05). Best results in bold

表2 使用CiDG训练的源模型在跨站点前列腺任务中对所有方法的定量评估。 适配按随机顺序进行(例如,A/E, F, D, B, C表示源模型在站点A上训练,并按顺序适配到目标域E、F、D、B和C,依此类推)。所有适配方法均使用相同的源模型(CiDG)。结果以Dice(%)/ASSD(mm)表示。第二行显示源域/目标域。源方法、通用方法和医学方法分别归入各自的组别。†表示方法的Dice/ASSD得分与本方法的得分之间具有统计显著性(𝑝 < 0.05)。粗体表示最佳结果。

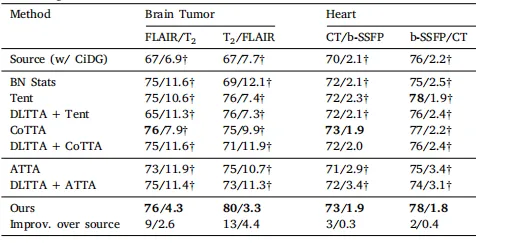

Table 3Quantitative evaluation of benchmarked methods (with CiDG source model) on the brain tumor and heart substructure segmentation tasks. The same source model (CiDG)was used by all benchmarked methods. Results are shown in form of Dice (%)/ASSD(mm). † denotes statistical significance between the Dice/ASSD score of a methodand that of our method (𝑝 < 0.05). Source and target domains were presented as source/target in the second row. Best results are in bold

表3 基准方法(使用CiDG源模型)在脑肿瘤和心脏子结构分割任务中的定量评估。 所有基准方法均使用相同的源模型(CiDG)。结果以Dice(%)/ASSD(mm)的形式表示。† 表示某方法的Dice/ASSD得分与我们方法的得分之间具有统计显著性(𝑝 < 0.05)。源域和目标域在第二行以"源域/目标域"形式呈现。粗体表示最佳结果。

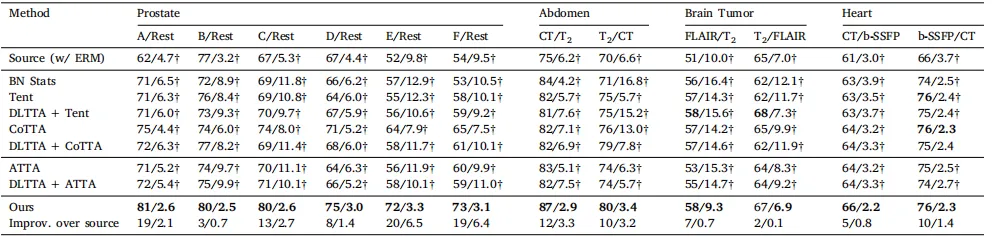

Table 4Quantitative evaluation of benchmarked methods (with ERM source model) on the prostate, abdominal, brain tumor, and heart substructure segmentation tasks. The same sourcemodel was used by all methods. Muscle segmentation was skipped due to the poor performance of the ERM-trained source model (Dice < 8%, ASSD NaN). Results were shownin form of Dice (%)/ASSD (mm). Source and target domains were presented as source/target in the second row. † denotes statistical significance between the Dice/ASSD score ofa method and that of our method (𝑝 < 0.05). Best results were inbold

表 4 使用 ERM 源模型在前列腺、腹部、脑肿瘤和心脏子结构分割任务上对基准方法的定量评估。所有方法均使用相同的源模型。由于ERM训练的源模型在肌肉分割任务上的表现较差(Dice < 8%,ASSD 为 NaN),肌肉分割任务被跳过。结果以 Dice (%) / ASSD (mm) 的形式表示。源域和目标域在第二行中以源域/目标域的形式呈现。† 表示该方法的 Dice/ASSD 分数与本文方法之间具有统计显著性 (𝑝 < 0.05)。最佳结果用粗体标出。

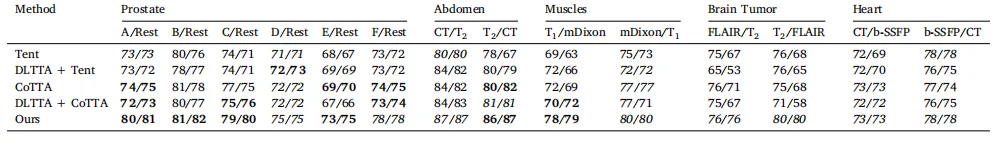

Table 5Comparison of the continual test-time adaptability of each model (with CiDG source model). The same source model was used by all benchmarked methods. ATTA was excludedfrom the comparison as it was designed for episodic test-time adaptation. Each cell presented each model’s running/final Dice scores (in %) for each scenario. Equal (in italics)or higher final Dice scores (in bold) indicate a model’s suitability for continual test-time adaptation.

表 5 比较每个模型(使用 CiDG 源模型)的持续测试时适应性。所有基准方法均使用相同的源模型。由于 ATTA 被设计用于情节性测试时适应(episodic test-time adaptation),因此未纳入比较。每个单元格表示每个模型在每种场景下的运行/最终 Dice 分数(以 % 为单位)。相等的(斜体)或更高的最终 Dice 分数(粗体)表明该模型适合持续测试时适应(continual test-time adaptation)。

Table 6Ablation study of the proposed method with both ERM and DG-trained source models on representative datasets. Each component is graduallyadded to demonstrate its contribution. Here, MT denotes mean teacher, SDF refers to the signed distance field, UMT stands for uncertainty-awaremean teacher, MLCC indicates multi-level cross-task consistency, and R is short for weight reset. † denotes statistical significance between theDice/ASSD score of an ablated and that of the proposed method (𝑝 < 0.05). Best results marked in bold

表 6 关于所提出方法的消融研究,使用 ERM 和 DG 训练的源模型在代表性数据集上进行。逐步添加每个组件以展示其贡献。表中,MT 表示均值教师(mean teacher),SDF 表示符号距离场(signed distance field),UMT 表示基于不确定性的均值教师(uncertainty-aware mean teacher),MLCC 表示多层次跨任务一致性(multi-level cross-task consistency),R 表示权重重置(weight reset)。† 表示消融方法的 Dice/ASSD 分数与所提方法之间具有统计显著性 (𝑝 < 0.05)。最佳结果用 粗体 标出。

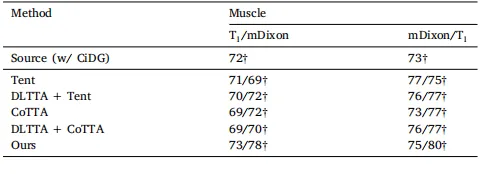

Table 7Quantitative evaluation of benchmarked methods (with CiDG source model) on themuscle segmentation with single-image and continuous adaptation. Results are shown asDices /Dicec , where Dices denotes the Dice score achieved with single image adaptationand Dicec is the Dice score achieved with continuous adaptation.

表 7 在肌肉分割任务上使用单图像和连续适应时对基准方法(使用 CiDG 源模型)的定量评估。结果以 Dices / Dicec 的形式显示,其中 Dices 表示通过单图像适应获得的 Dice 分数,Dicec 表示通过连续适应获得的 Dice 分数。

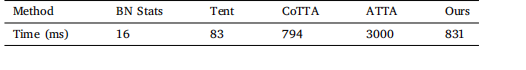

Table 8Comparison of batch-wise inference time of benchmarked adaptation methods on theabdominal segmentation task

表 8 基准适应方法在腹部分割任务上的逐批推理时间比较。